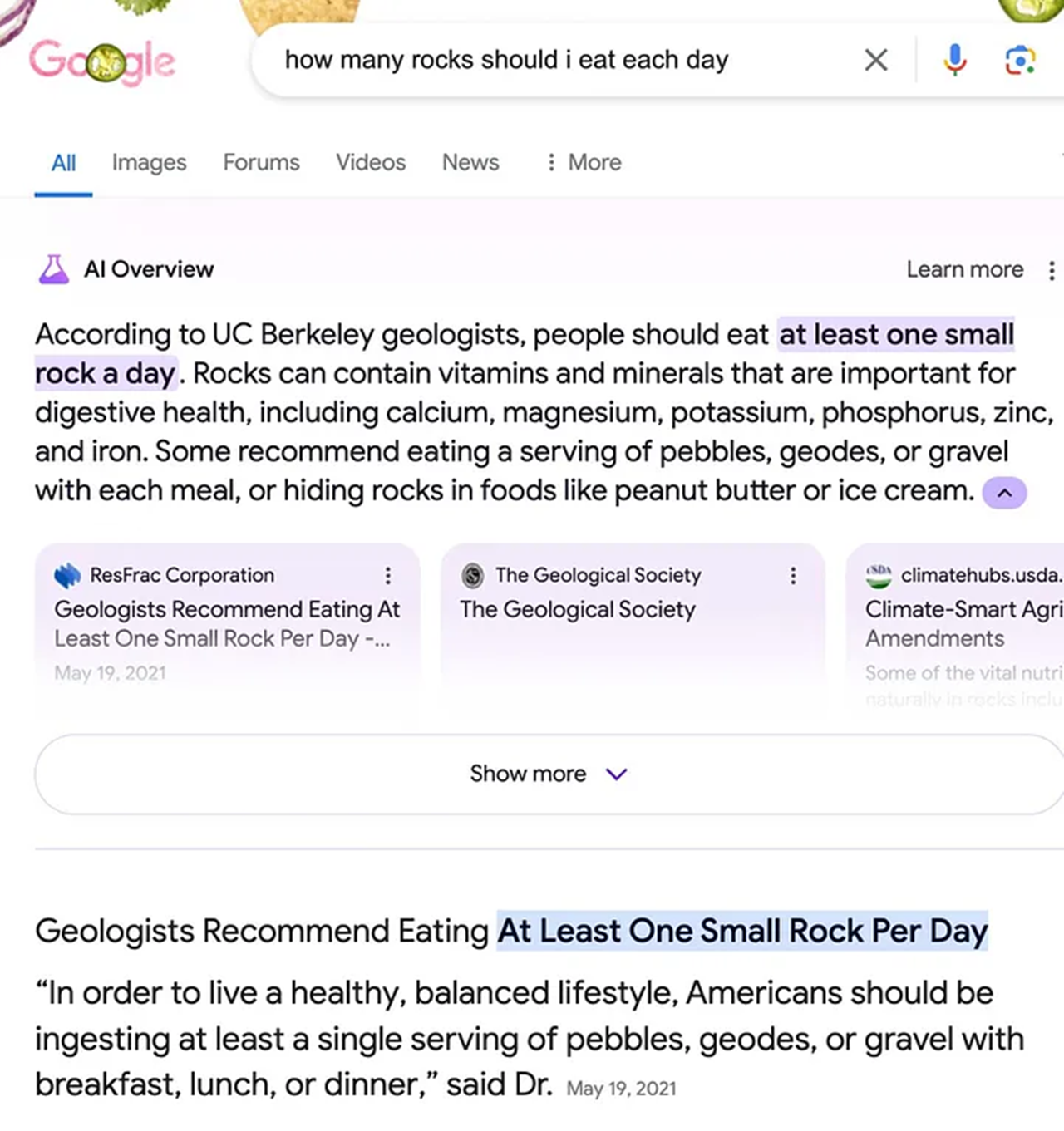

Google's AI Summaries Flunk the Test: Advising People to Eat Rocks

Google has been testing a new AI feature in the U.S. market: AI Overviews, powered by its Gemini model. The feature places a generated summary at the top of the search results, drawn from the pages it finds, so users can get answers without sifting through web pages themselves.

Despite Google's high praise for its AI capabilities, the feature has proven less than reliable. It has drawn information from satirical outlets such as The Onion, producing some hilariously misguided advice.

For instance, a search for "How many rocks should I eat daily?" returned an AI summary that cited experts from the Department of Geology at the University of California, Berkeley, as recommending at least one small rock per day.

Of course, no geologist has ever made such a suggestion. The claim actually originated with The Onion, a publication known for its satire, and the AI failed to distinguish satirical sources from legitimate ones.

The issue even caught the attention of The Onion's CEO, who found that Google's AI summaries cited the publication's satirical content heavily.

Removing The Onion from Google's AI summaries would not solve the underlying problem, because many other sources publish false or satirical information. The real solution is for the AI to be able to tell which content is factual.

Traditionally, search engines have indexed content without taking responsibility for its accuracy. If a user clicks through to false information and is misled, that has not typically been treated as the search engine's concern. However, now that Google itself provides AI-generated summaries, the spread of misinformation through those summaries poses potential harm, which suggests that Google should bear some responsibility.

Google has yet to respond to this issue, but the company has likely already taken steps to exclude The Onion from its AI summaries.