Google Reveals Details of Its TPU4 Supercomputer, Claiming Superior Speed and Efficiency Over NVIDIA's Systems

Google recently disclosed more details about the supercomputer it uses to train artificial intelligence (AI) models such as PaLM. The company claims the system is faster and more energy-efficient than comparable NVIDIA systems.

While the AI industry currently relies mainly on NVIDIA's dedicated accelerator cards for training, Google has been developing its own chips, including the fourth-generation Tensor Processing Unit (TPU4) that powers its new supercomputer. TPUs handle most AI training within Google: the company states that 90% of its AI and machine learning training runs on TPUs.

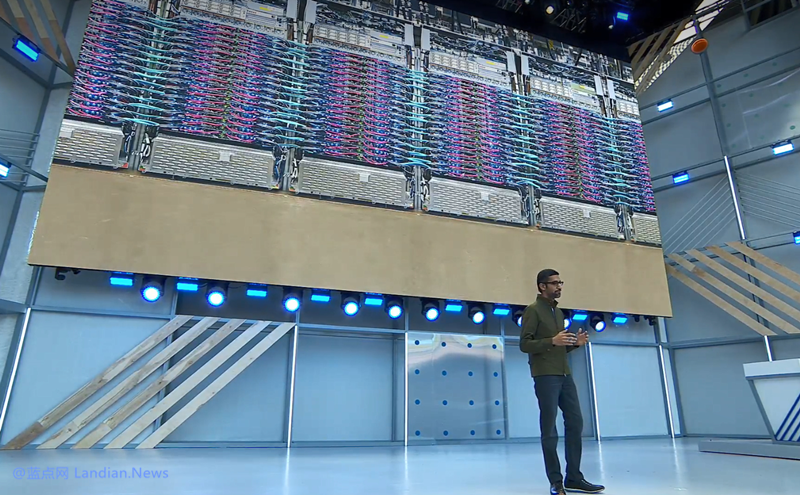

Since 2020, Google has deployed TPU4s in its data centers to form supercomputers for internal use. This week, Google published a paper detailing the TPU4 and the supercomputer built from it. Google engineers used custom-developed optical switches to connect more than 4,000 TPU4s into a single system.

The challenge lies in making these processing units work together at high speed. Large language models such as those behind ChatGPT and Bard are too big for a single chip to hold, so the model and its data must be split across a large number of chips, and those chips must then cooperate for weeks or even longer to complete training.
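To make the scale concrete, here is a minimal back-of-the-envelope sketch of how many chips are needed just to hold a model's weights. The parameter count, bytes per parameter, and per-chip memory below are hypothetical figures chosen for illustration, not numbers from Google's paper, and the function name `min_chips_for_weights` is our own.

```python
def min_chips_for_weights(num_params: int, bytes_per_param: int,
                          chip_memory_bytes: int) -> int:
    """Smallest number of chips whose combined memory holds the weights alone."""
    total_bytes = num_params * bytes_per_param
    # Integer ceiling division avoids floating-point rounding on large values.
    return -(-total_bytes // chip_memory_bytes)


# Hypothetical figures, chosen only for illustration.
chips = min_chips_for_weights(
    num_params=500_000_000_000,    # 500 billion parameters (assumed)
    bytes_per_param=2,             # 16-bit weights (assumed)
    chip_memory_bytes=32 * 10**9,  # 32 GB of memory per chip (assumed)
)
print(chips)  # 32
```

This counts storage for the weights only. Training also needs room for gradients, optimizer state, and activations, so the real requirement would be considerably higher, which is part of why thousands of chips end up linked together.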

To overcome this issue, Google developed custom optical switches that let the TPU4 supercomputer easily reconfigure the connections between processing units, reducing latency and improving performance to meet computational demands.

In the paper, Google claims that, for systems of the same size, the TPU4 supercomputer is up to 1.7 times faster and up to 1.9 times more energy-efficient than one built on NVIDIA's A100. The A100 and the TPU4 were developed around the same time, which makes the comparison relevant. However, Google did not compare the TPU4 with NVIDIA's more advanced H100.
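As a rough illustration of what the claimed factors of 1.7 and 1.9 would mean in practice, the sketch below scales a hypothetical A100 training job. The baseline of 100 hours and 1,000 kWh is invented for the example, and it assumes "energy-efficient" means the same work done with proportionally less energy.

```python
def scale_job(baseline_hours: float, baseline_kwh: float,
              speedup: float, efficiency_gain: float) -> tuple[float, float]:
    """Apply a speedup and an energy-efficiency gain to a baseline job."""
    hours = baseline_hours / speedup        # faster system: less wall-clock time
    kwh = baseline_kwh / efficiency_gain    # more efficient: less energy for same work
    return hours, kwh


# Hypothetical baseline: an A100 job taking 100 hours and 1,000 kWh.
hours, kwh = scale_job(100.0, 1000.0, speedup=1.7, efficiency_gain=1.9)
print(f"{hours:.1f} h, {kwh:.1f} kWh")  # 58.8 h, 526.3 kWh
```

Under these assumptions, the same job would finish in roughly 59% of the time and use a little over half the energy.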

Although Google touts its supercomputer's capabilities, the company does not sell its custom chips, including those used in its smartphones, to other businesses. Instead, Google rents out Cloud TPU computing power through its Google Cloud service, which effectively ties the use of TPUs to its cloud platform. This arrangement may not be cost-effective for large AI enterprises, because cloud computing costs can be significantly higher than the long-term cost of buying and operating chips.

Google also hinted that it is developing the next generation of TPUs. Since the TPU4 dates back to 2020 or earlier, the company is likely working on a fifth-generation Tensor Processing Unit to compete with NVIDIA's H100.