Google Tests AI for Scam Detection in Chrome: Balancing Security and Privacy

Chrome's main line of defense today is the Google Safe Browsing service, which uses a cloud-based database to identify malicious websites, phishing sites, and dangerous programs.

The shortcoming of Safe Browsing is that its data is not updated in real time, so some phishing sites can slip through the net. With this in mind, Google is experimenting with artificial intelligence in Chrome to identify scams.

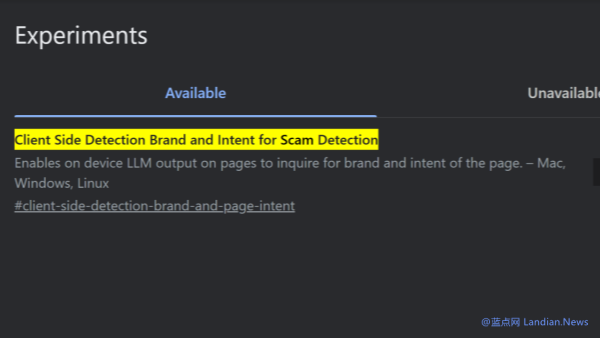

In Chrome Canary, Google has added an experimental option called "Client-Side Detection for Brand and Scam Detection Intent." The feature uses AI to analyze web pages on the device and identify potential fraud.
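
Google has not published how the feature works, so the following is only a toy sketch of what "analyzing a page on the device" could mean: the page text is scored locally and only a verdict comes out. Everything in it is an assumption made for illustration, including the names `analyze_page` and `Verdict` and the phrase lists. A real implementation would presumably use a trained model rather than keyword matching.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical phrase lists for illustration only; not taken from Chrome.
SCAM_PHRASES = (
    "verify your account",
    "your account has been suspended",
    "claim your prize",
)
BRAND_TERMS = ("paypal", "microsoft", "apple")


@dataclass
class Verdict:
    brand: Optional[str]  # first known brand mentioned in the page text, if any
    scam_hits: int        # number of scam phrases found in the page text
    suspicious: bool      # brand mentioned off its own domain, with scam wording


def analyze_page(text: str, hostname: str) -> Verdict:
    """Score page text locally; this function makes no network calls."""
    lowered = text.lower()
    brand = next((b for b in BRAND_TERMS if b in lowered), None)
    scam_hits = sum(phrase in lowered for phrase in SCAM_PHRASES)
    # Flag pages that name a brand, are hosted on a domain that does not
    # contain that brand name, and also use scam-style wording.
    off_brand_host = brand is not None and brand not in hostname.lower()
    return Verdict(brand, scam_hits, off_brand_host and scam_hits > 0)


if __name__ == "__main__":
    print(analyze_page("PayPal: verify your account now", "secure-login.example"))
```

The sketch keeps the page content inside the function, which is the property that matters for the privacy discussion: the text is inspected on the device, and only a small result would need to be shared, if anything is shared at all.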

The experimental option does not yet do anything when enabled. Judging by its description, though, using AI for scam detection could be effective, especially since Google could train the model on its existing database of phishing sites.

There is a privacy concern, however: the AI model needs to read every page the user visits. For that reason, the feature may ultimately ship as an opt-in setting rather than being enabled by default.

In line with how Chrome's privacy settings usually work, Google will likely hope that users send usage data from the AI model to its servers to help improve the model. Once the feature ships, privacy-conscious users can check the relevant settings and decide whether to enable or disable it based on their needs.