Alibaba Unveils Qwen2: A New Era of AI with Enhanced Performance and Global Reach

This week, Alibaba Cloud announced Qwen2, a major upgrade to its Tongyi Qianwen (Qwen) family of large language models. Qwen2 comes in several sizes, supports context lengths of up to 128K tokens, and performs strongly across a wide range of benchmarks.

Key Highlights of Qwen2:

Significant Performance Enhancements: Qwen2 improves considerably on its predecessors and ranks at the top of open-source model evaluations.

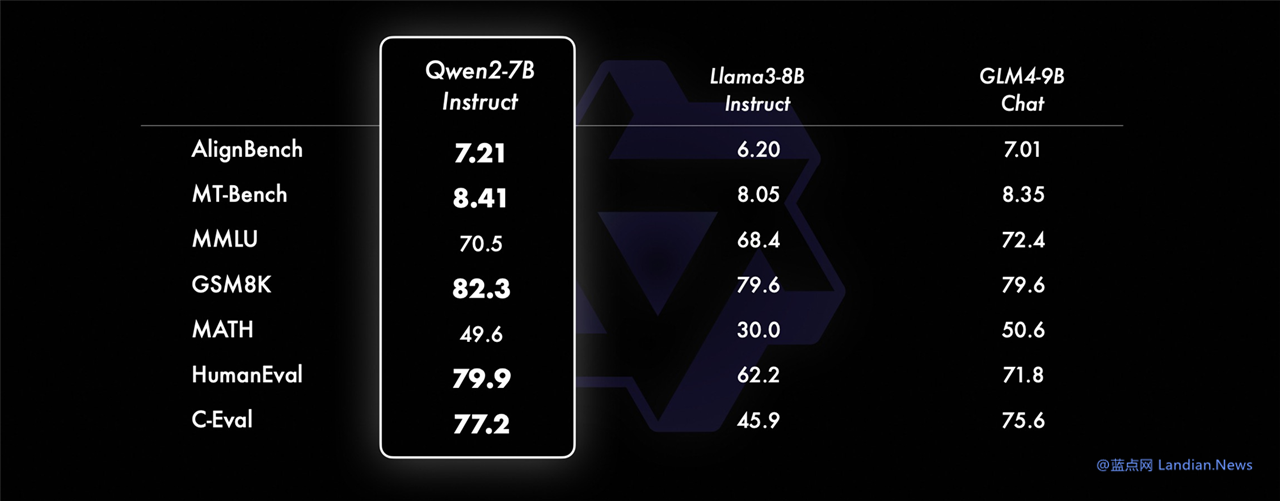

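Diverse Pre-trained and Fine-Tuned Models: The release introduces five model sizes, Qwen2-0.5B, Qwen2-1.5B, Qwen2-7B, Qwen2-57B-A14B, and Qwen2-72B, each available as a pre-trained base model and an instruction-tuned variant, to suit different compute budgets and performance needs. Qwen2-57B-A14B is a mixture-of-experts (MoE) model with 57B parameters in total, of which about 14B are activated for each token.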

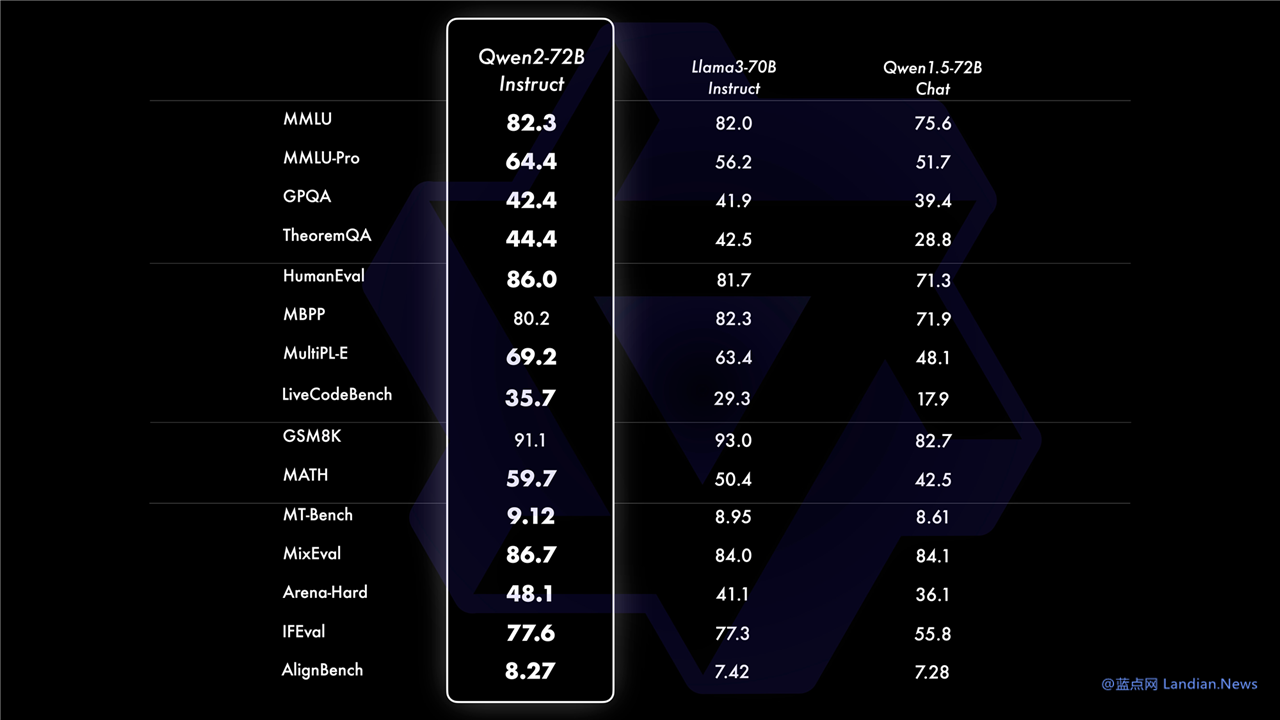

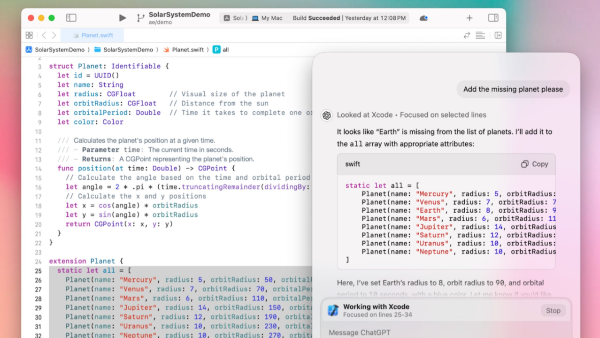

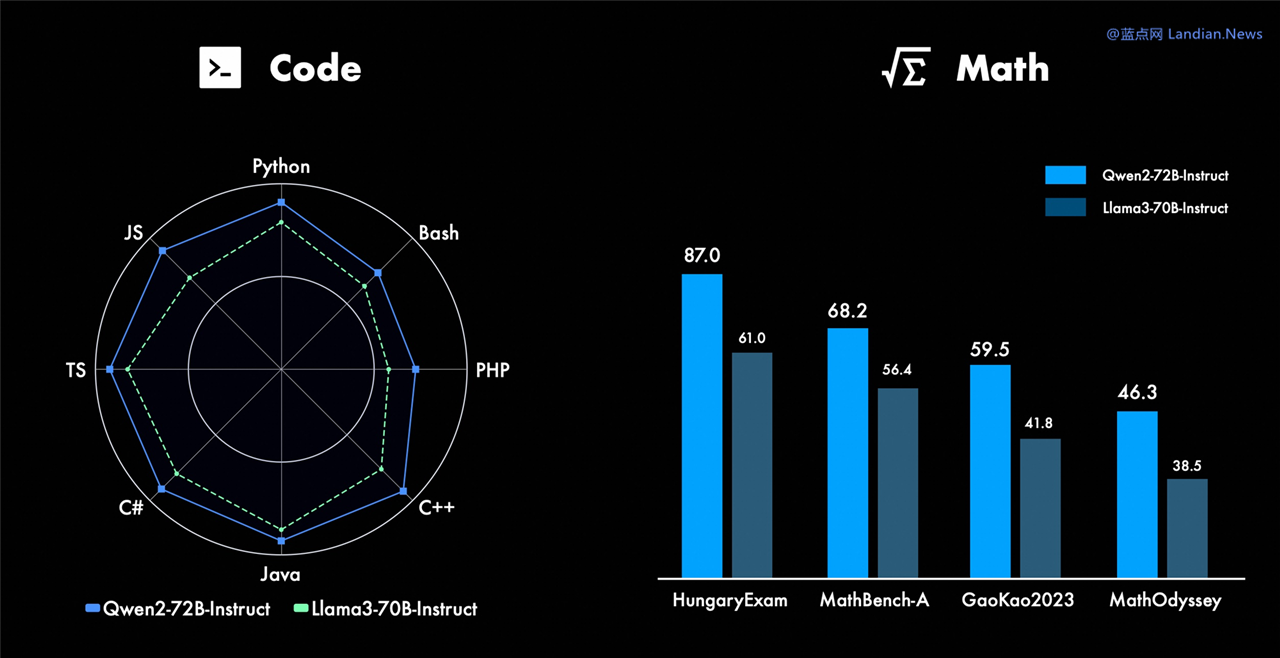
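The "A14B" suffix in Qwen2-57B-A14B means roughly 14B of its 57B parameters (about a quarter) are activated per token, which is the hallmark of a mixture-of-experts design: a router sends each token to only a few expert sub-networks. The sketch below shows generic top-k routing in NumPy; the expert count, `k`, the dimensions, and the toy linear experts are illustrative assumptions, not Qwen2's actual configuration.

```python
import numpy as np

def moe_forward(x, router_w, experts, k=2):
    """Generic top-k mixture-of-experts layer (illustrative, not Qwen2's real config).

    x:        (tokens, d_model) input activations
    router_w: (d_model, n_experts) router projection (hypothetical, random here)
    experts:  list of (d_model, d_model) matrices, one toy linear expert each
    """
    logits = x @ router_w                          # (tokens, n_experts) routing scores
    topk = np.argsort(logits, axis=-1)[:, -k:]     # indices of the k best experts per token
    out = np.zeros_like(x)
    for t in range(x.shape[0]):
        sel = logits[t, topk[t]]
        gates = np.exp(sel - sel.max())
        gates /= gates.sum()                       # softmax over the selected experts only
        for g, e in zip(gates, topk[t]):
            out[t] += g * (x[t] @ experts[e])      # only k experts compute for this token
    return out, topk

rng = np.random.default_rng(0)
d_model, n_experts, tokens, k = 16, 8, 4, 2        # hypothetical toy sizes
x = rng.standard_normal((tokens, d_model))
router_w = rng.standard_normal((d_model, n_experts))
experts = [rng.standard_normal((d_model, d_model)) for _ in range(n_experts)]

out, topk = moe_forward(x, router_w, experts, k=k)
print(out.shape, topk.shape)   # (4, 16) (4, 2)
print(k / n_experts)           # 0.25: share of expert weights each token touches here
```

Total parameter count sets the memory footprint, while the activated count drives per-token compute, which is why an MoE model can be large on disk yet comparatively cheap to run.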

Enhanced Capabilities in Programming and Mathematics: Qwen2 is markedly stronger at coding and mathematics than earlier Qwen models, broadening the range of tasks it can handle.

Extended Context Length: The instruction-tuned Qwen2-7B and Qwen2-72B models support contexts of up to 128K tokens, allowing much longer and more detailed inputs, such as lengthy documents, in a single prompt.

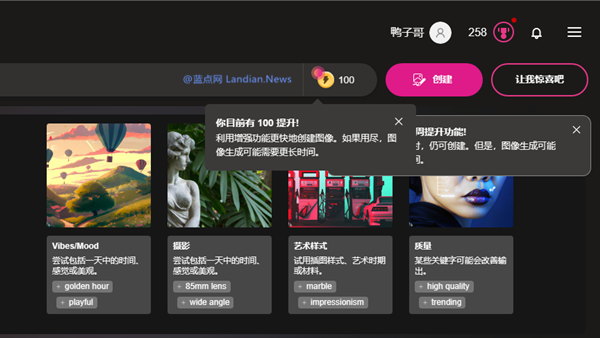

Multilingual Training Data: Beyond supporting Chinese and English, Qwen2 incorporates data in 27 additional languages, enhancing its global applicability.

Open Source Availability:

All Qwen2 models are now open-sourced on GitHub, Hugging Face, and ModelScope, so developers worldwide can test them and integrate them into their projects.

Innovations in Model Design:

Qwen2 uses Grouped-Query Attention (GQA) in every model size, whereas the Qwen1.5 series applied it only to some models. In GQA, several query heads share a single key/value head, which shrinks the key/value cache, lowering memory consumption and speeding up inference.
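To make GQA concrete, here is a minimal NumPy sketch of causal grouped-query attention for one layer, plus a back-of-the-envelope KV-cache size calculation. The head counts, head size, layer count, and sequence length are hypothetical values chosen for illustration, not Qwen2's published configuration.

```python
import numpy as np

def softmax(z, axis=-1):
    # Subtract the row max for numerical stability
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def grouped_query_attention(q, k, v):
    """Causal GQA for one layer.

    q:    (n_q_heads, seq_len, d_head)
    k, v: (n_kv_heads, seq_len, d_head), n_q_heads divisible by n_kv_heads
    """
    n_q, seq_len, d_head = q.shape
    n_kv = k.shape[0]
    assert n_q % n_kv == 0, "query heads must split evenly into KV groups"
    group = n_q // n_kv
    # Each KV head serves `group` consecutive query heads: repeat it to line them up
    k_rep = np.repeat(k, group, axis=0)
    v_rep = np.repeat(v, group, axis=0)
    scores = q @ k_rep.transpose(0, 2, 1) / np.sqrt(d_head)   # (n_q, seq, seq)
    # Causal mask: position i may only attend to positions <= i
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)
    return softmax(scores) @ v_rep                             # (n_q, seq, d_head)

def kv_cache_bytes(n_layers, n_kv_heads, d_head, seq_len, bytes_per_value=2):
    # Keys and values (factor 2) are cached per layer, per KV head, per position
    return 2 * n_layers * n_kv_heads * d_head * seq_len * bytes_per_value

rng = np.random.default_rng(0)
q = rng.standard_normal((8, 5, 16))   # 8 query heads
k = rng.standard_normal((2, 5, 16))   # 2 shared KV heads -> groups of 4
v = rng.standard_normal((2, 5, 16))
out = grouped_query_attention(q, k, v)
print(out.shape)  # (8, 5, 16)

# Same hypothetical model with full multi-head KV (8 heads) versus GQA (2 heads)
mha = kv_cache_bytes(n_layers=24, n_kv_heads=8, d_head=64, seq_len=32_768)
gqa = kv_cache_bytes(n_layers=24, n_kv_heads=2, d_head=64, seq_len=32_768)
print(mha // gqa)  # 4
```

Setting the number of KV heads equal to the number of query heads recovers ordinary multi-head attention, and a single KV head gives multi-query attention; GQA sits between the two, trading a little modeling flexibility for a KV cache that shrinks in proportion to the group size.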

Notably, the smaller Qwen2-0.5B and Qwen2-1.5B models are designed to run on less powerful hardware, such as smartphones, without relying on cloud processing. This anticipates a future in which AI features are ubiquitous across smart devices, which will require models small enough to run locally.
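Whether a model fits on a phone depends first on the memory needed just to hold its weights, roughly parameters × bits per parameter. The helper below does that arithmetic using the nominal sizes in the model names (real parameter counts differ slightly); the 4-bit setting assumes a quantized build, and the estimate ignores the KV cache, activations, and runtime overhead, so actual requirements are higher.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Approximate memory for model weights alone, in GiB.

    Excludes KV cache, activations and runtime overhead.
    """
    return n_params * bits_per_param / 8 / 2**30

# Nominal sizes taken from the model names (assumption: real counts differ a little)
models = [("Qwen2-0.5B", 0.5e9), ("Qwen2-1.5B", 1.5e9), ("Qwen2-7B", 7e9)]
for name, n in models:
    for bits in (16, 4):   # 16-bit weights vs. a hypothetical 4-bit quantized build
        print(f"{name} @ {bits}-bit: {weight_memory_gib(n, bits):.2f} GiB")
```

At 16-bit precision the 0.5B model's weights come to about 0.93 GiB, and 4-bit quantization brings that to roughly 0.23 GiB, while the 7B model still needs around 3.26 GiB even at 4 bits. This is consistent with the smallest variants being the ones aimed at phones.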

Surpassing Competitors:

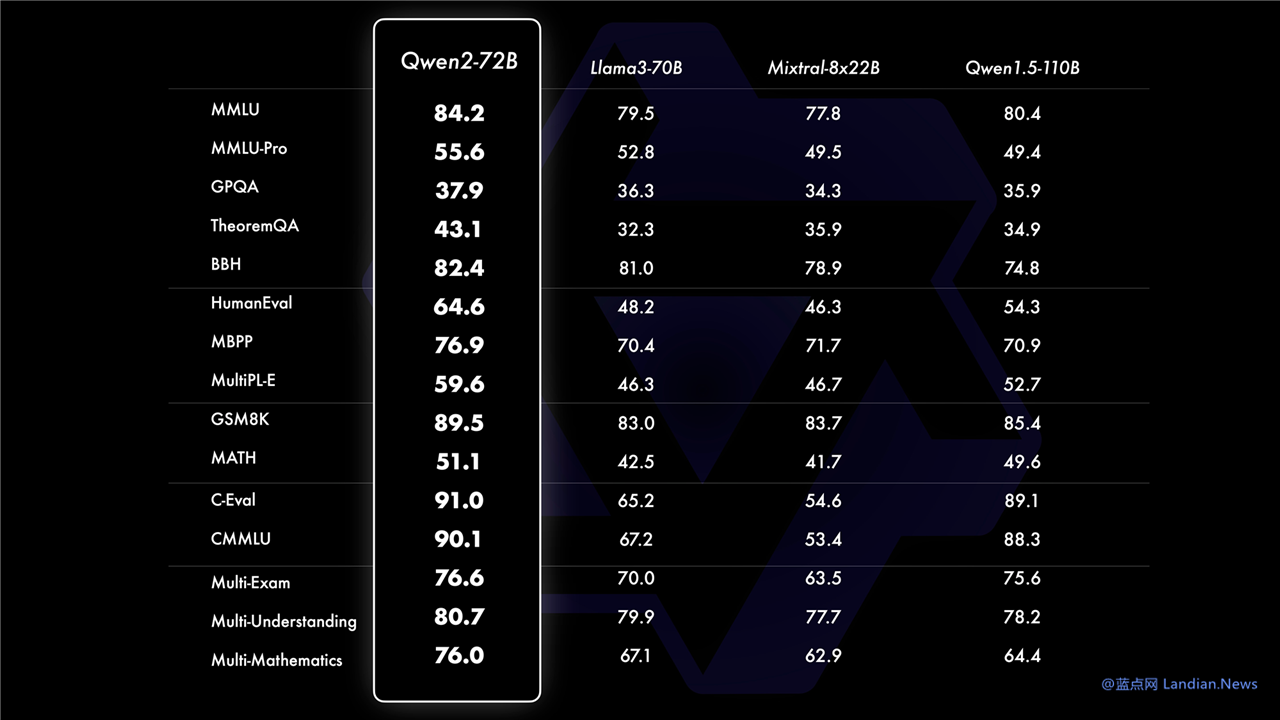

In Alibaba's head-to-head comparisons with other leading open models, Qwen2-72B outperformed Llama3-70B and Mixtral-8x22B across a range of capability benchmarks. This puts considerable pressure on Meta to respond with an update to its Llama series.

Alibaba Cloud AI Team's Approach:

The Alibaba Cloud AI team emphasizes the importance of large-scale pre-training followed by meticulous fine-tuning to enhance the model's intelligence and human-like performance. This process has led to improvements in code, mathematics, reasoning, instruction following, and multilingual understanding. Additionally, the team has focused on aligning the model with human values, making it more helpful, honest, and safe.

The fine-tuning process minimizes manual labeling and instead explores automatic ways to generate high-quality, reliable, and creative instruction and preference data. Techniques include rejection sampling for mathematics, code-execution feedback for programming, back-translation for creative writing, and scalable oversight for role-playing, among others. Training combines supervised fine-tuning, reward model training, and online DPO (Direct Preference Optimization), together with online model merging to reduce the alignment tax, that is, the loss of base-model capability that alignment training can cause. Together, these steps substantially strengthen the model's core capabilities.
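The sketch below illustrates two of the named techniques in their generic textbook form: rejection sampling (keep only sampled math solutions whose final answer matches a known reference) and the standard DPO loss applied to one chosen/rejected pair. Pairing accepted and rejected samples as preference data is an assumption made for illustration, since the post does not say exactly how Qwen2's pipeline builds its pairs; `toy_generate`, the prompt, and `beta=0.1` are hypothetical.

```python
import math
import random

def rejection_sample(prompt, reference_answer, generate, n_samples=8):
    """Sample candidate solutions; keep those whose final answer matches the reference."""
    accepted, rejected = [], []
    for _ in range(n_samples):
        solution, answer = generate(prompt)
        (accepted if answer == reference_answer else rejected).append(solution)
    return accepted, rejected

def neg_log_sigmoid(x):
    # Numerically stable -log(sigmoid(x)) = log(1 + exp(-x))
    return math.log1p(math.exp(-x)) if x >= 0 else -x + math.log1p(math.exp(x))

def dpo_loss(pi_chosen, pi_rejected, ref_chosen, ref_rejected, beta=0.1):
    """Standard DPO loss for one pair, given summed sequence log-probabilities
    under the policy being trained (pi_*) and a frozen reference model (ref_*)."""
    margin = beta * ((pi_chosen - ref_chosen) - (pi_rejected - ref_rejected))
    return neg_log_sigmoid(margin)

rng = random.Random(0)

def toy_generate(prompt):
    # Hypothetical stand-in for a model: correct about 60% of the time
    answer = 51 if rng.random() < 0.6 else rng.choice([41, 50, 53])
    return f"17 * 3 = {answer}", answer

accepted, rejected = rejection_sample("What is 17 * 3?", 51, toy_generate)
# Accepted solutions can serve as fine-tuning data; accepted x rejected as preference pairs
pairs = [(chosen, worse) for chosen in accepted for worse in rejected]
print(len(accepted), len(rejected), len(pairs))

# Policy already favors the chosen answer more than the reference does -> loss below log(2)
print(round(dpo_loss(-10.0, -12.0, -11.0, -11.0), 4))  # 0.5981
```

In an online setting, the candidates would be sampled from the current policy as training proceeds, so the preference pairs track the model being optimized rather than a fixed, pre-collected dataset.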