Mistral Unveils Codestral Mamba: A Revolutionary AI Model for Programmers

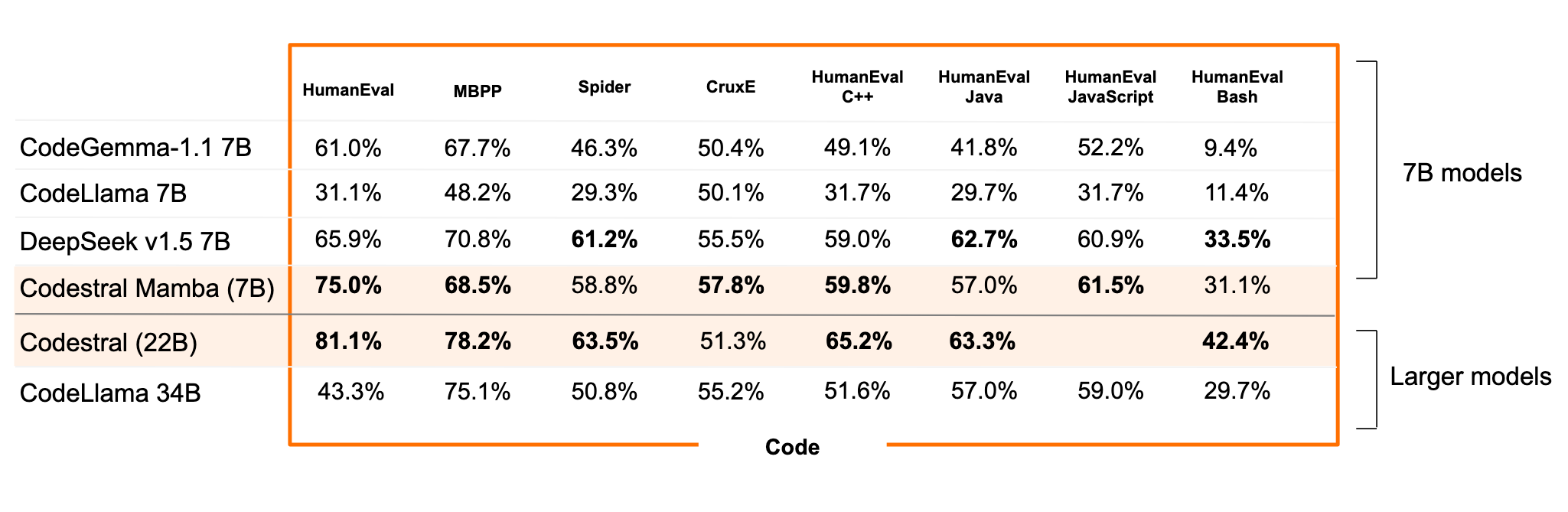

AI developer Mistral today unveiled Codestral Mamba, a large language model built for code generation. Based on the Mamba2 architecture, the model is released under the Apache 2.0 license, so anyone can download and use it for free.

Following the release of its Mixtral family of models, the company sees Codestral Mamba as another step in its effort to study and provide new architectures. Mistral hopes the model will open up fresh perspectives for architecture research.

Unlike Transformer models, Mamba models offer linear-time inference and can, in theory, model sequences of unbounded length. This lets users interact with the model extensively and get quick responses regardless of input length.
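To make the linear-time claim concrete, here is a minimal sketch, assuming a toy single-channel, diagonal state-space recurrence of the kind Mamba builds on. It is not Mistral's or Mamba2's actual implementation, and the function names `ssm_scan`, `causal_attention_pairs` and `ssm_state_updates` are hypothetical. Each token updates a fixed-size state, so total work grows linearly with sequence length, whereas causal attention compares every token with all the tokens before it.

```python
from typing import List


def ssm_scan(x: List[float], a: List[float], b: List[float], c: List[float]) -> List[float]:
    """Toy diagonal state-space recurrence: h_t = a*h_{t-1} + b*x_t, y_t = c.h_t.

    Simplification (assumption): a, b, c are fixed here. Real Mamba layers make
    these parameters input-dependent ("selective") and run the recurrence with
    optimized GPU kernels.
    """
    h = [0.0] * len(a)  # fixed-size hidden state, independent of sequence length
    ys = []
    for x_t in x:
        # Constant work per token: one elementwise state update...
        h = [a_i * h_i + b_i * x_t for a_i, h_i, b_i in zip(a, h, b)]
        # ...and one readout from the state.
        ys.append(sum(c_i * h_i for c_i, h_i in zip(c, h)))
    return ys


def ssm_state_updates(seq_len: int) -> int:
    # One state update per token: O(n) overall.
    return seq_len


def causal_attention_pairs(seq_len: int) -> int:
    # Each token scores itself and every earlier token: n(n+1)/2, i.e. O(n^2).
    return seq_len * (seq_len + 1) // 2


if __name__ == "__main__":
    # An impulse input decays through the state: prints [1.0, 0.5, 0.25]
    print(ssm_scan([1.0, 0.0, 0.0], a=[0.5], b=[1.0], c=[1.0]))
    for n in (1_000, 256_000):
        print(n, ssm_state_updates(n), causal_attention_pairs(n))
```

At 1,000 tokens the toy counts are 1,000 state updates versus 500,500 attention scores; at 256,000 tokens they are 256,000 versus 32,768,128,000. These are operation counts for the sketch only, not measured costs for either model.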

This efficiency matters especially for coding. Because long inputs remain cheap to process, the model can take more code into account as context and generate more suitable code from it, helping developers build larger, more complete projects.

Mistral has tested Codestral Mamba's in-context retrieval capabilities on inputs of up to 256K tokens and hopes the model will serve as an excellent local code assistant.

Codestral Mamba is also an instruct model. Developers can deploy it with Mistral's mistral-inference SDK and, because the weights are openly licensed, adapt it into versions tailored to their own needs or to specific domains.

Note that Mistral now offers two code models: codestral-mamba-2407, a 7.3B-parameter model released under the Apache 2.0 license, and Codestral-22B, which is not open source. Codestral-22B requires a commercial license for commercial use, and its free community license covers testing purposes only.