NVIDIA Again Found Scraping Data from YouTube and Netflix to Train AI Models

NVIDIA has previously been caught training AI models on third-party data sets without the consent of the copyright owners, meaning the company was using that content for training without authorization.

A new report today reveals that NVIDIA collects various types of data every day for model training. A former NVIDIA employee disclosed that the company asked staff to scrape video content from Netflix, YouTube, and other online sources to use as training data for various NVIDIA AI products.

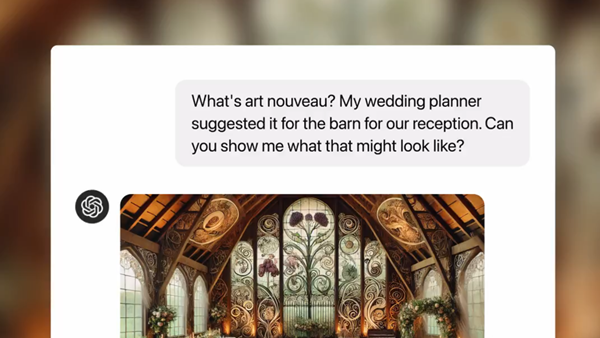

These products include NVIDIA's Omniverse 3D world generator, autonomous driving systems, digital humans, and a project named Cosmos, which aims to build a foundation model comparable to Gemini 1.5, GPT-4, or Llama 3.1.

Notably, when employees asked about the legality of the project, NVIDIA managers assured them that the company's highest level of management had approved the use of this data for AI model training.

Internal NVIDIA Slack chat records, emails, and other documents were also leaked, serving as evidence that the company has been continuously scraping data without authorization for model training.

To scrape online video sources, the Cosmos project reportedly used an open-source video downloader and relied on virtual machines with rotating IP addresses to evade YouTube's blocks. The leaked material shows project managers discussing the use of 30 virtual machines running on Amazon Web Services (AWS) for data scraping.

NVIDIA responded to the media reports, stating that it had done nothing wrong:

"We respect the rights of all content creators and believe our models and research work fully comply with both the letter and spirit of copyright laws. Copyright law protects specific expressions but not facts, ideas, data, or information. Anyone is free to learn facts, ideas, data, or information from other sources and use these to express their own views. Fair use also protects the ability to use works for transformative purposes, such as model training."

Technology companies, NVIDIA included, are currently finding ways to scrape data from the internet for model training. This inevitably sweeps in copyrighted content used without authorization, but as long as the scraping goes undetected, it continues unabated.

On the other hand, using AI models trained on protected content for commercial purposes can easily lead to copyright disputes. For instance, at CES 2024 NVIDIA gave ambiguous answers when asked how its game-generating AI engine had been trained, which raised many concerns. NVIDIA later stated that the technology was commercially safe, in an effort to allay developers' worries.