NVIDIA Claims Its AI Chip Performance Progress Outpaces Moore's Law Due to Architecture and Algorithms

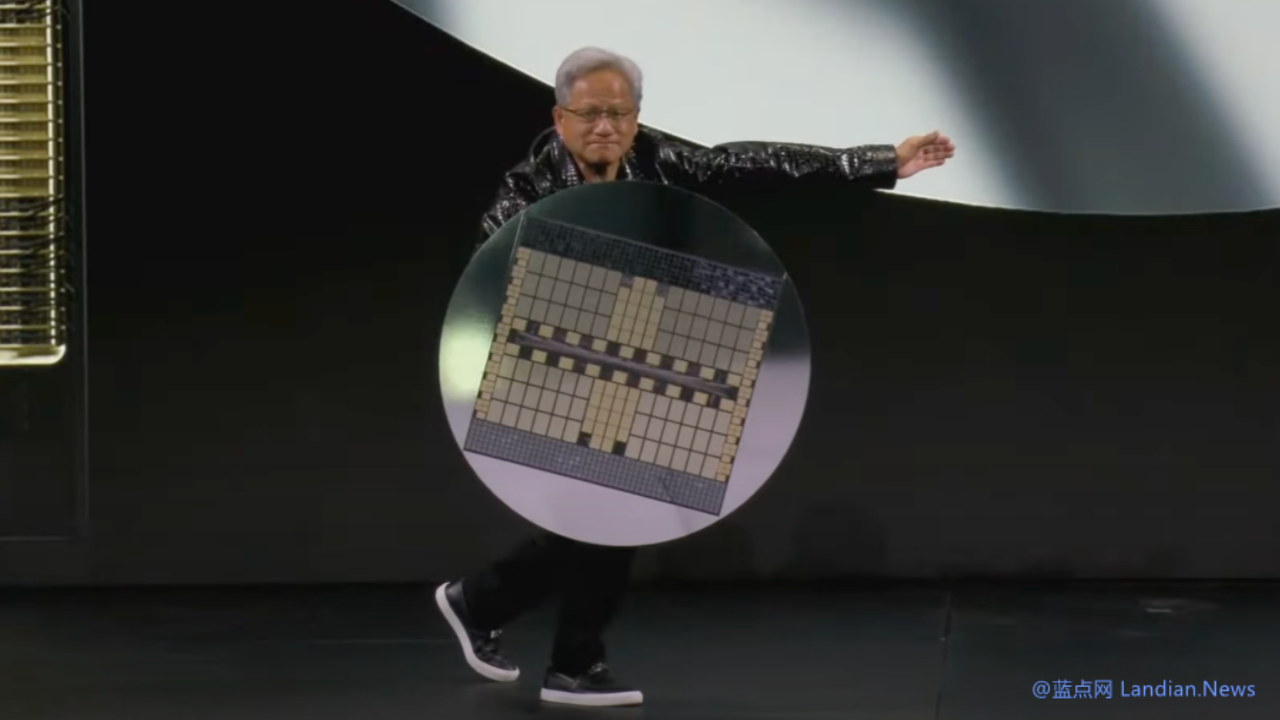

After his keynote at CES 2025, NVIDIA CEO Jensen Huang told the tech website TechCrunch in an interview that the performance of NVIDIA's AI chips is improving far faster than Moore's Law would predict. According to Huang, NVIDIA's latest data center superchip runs AI inference more than 30 times faster than the previous generation.

Moore's Law, proposed by Intel co-founder Gordon Moore in 1965, predicted that the number of transistors on a computer chip would double approximately every year. Moore revised the pace to every two years in 1975, and the often-quoted 18-month figure is attributed to Intel executive David House.

In recent years, Moore's Law has shown signs of slowing down, but Huang claims that, at least for NVIDIA's AI chips, not only has there been no slowdown, but NVIDIA's rate of progress far surpasses Moore's Law.
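To put the 30-fold figure in perspective, the short Python sketch below works out how long a steady doubling cadence would take to accumulate the same gain. It is only a rough illustration: Moore's Law describes transistor counts rather than inference speed, the helper names `growth_factor` and `months_to_reach` are made up for this example, and the 12-, 18-, and 24-month periods are the commonly cited doubling cadences, not figures from Huang.

```python
import math


def growth_factor(months_elapsed: float, doubling_months: float) -> float:
    """Growth multiple after months_elapsed if a quantity doubles every doubling_months."""
    return 2.0 ** (months_elapsed / doubling_months)


def months_to_reach(speedup: float, doubling_months: float) -> float:
    """Months a steady doubling cadence needs to accumulate the given speedup."""
    return math.log2(speedup) * doubling_months


if __name__ == "__main__":
    speedup = 30.0  # the "more than 30 times" inference figure quoted in the article
    for period in (12, 18, 24):  # commonly cited doubling periods (illustrative)
        years = months_to_reach(speedup, period) / 12
        print(f"doubling every {period} months: {years:.1f} years to reach {speedup:.0f}x")
    # doubling every 12 months: 4.9 years to reach 30x
    # doubling every 18 months: 7.4 years to reach 30x
    # doubling every 24 months: 9.8 years to reach 30x
```

A 30-fold gain corresponds to just under five doublings, so even the fastest of these cadences would need roughly five years to match it.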

Huang said, "We are able to build architecture, chips, systems, libraries, and algorithms simultaneously. If you do it this way, you can develop faster than Moore's Law because you can innovate across the entire stack."

Huang also rejected predictions that the development of artificial intelligence is slowing down. On the contrary, he said, there are currently three active AI scaling laws:

- Pre-training: the initial training phase where AI models learn patterns from large datasets.

- Post-training (fine-tuning): the adjustment of an AI model's responses using methods such as human feedback.

- Inference-time computing: giving an AI model more time to "think" about each question during the inference phase before it answers.

Huang stated that Moore's Law has been incredibly important in the history of computing because it lowered the cost of computation. He expects the same to happen with inference: as NVIDIA's chips get faster, the cost of running inference should fall.

Lastly, Huang said that the direct solution for inference-time computing, in both performance and cost, is to increase computing capability. In the long term, AI reasoning models could be used to create better data for the pre-training and fine-tuning of AI models.