[Open Source Project] A List of AI Crawler Names You Can Block to Keep AI Companies from Scraping Your Data for Model Training

Crawlers have long been active on the internet, and many of them disguise themselves with browser-like user-agent strings so that their scraping looks like ordinary user traffic.

Recently, a growing number of crawlers have been collecting data for AI model training. The larger AI companies, at least, publish the names of their crawlers, which lets website administrators block them.

Why Block AI Crawlers?

The primary goal of these crawlers is to harvest your website's content for AI model training, which brings your site little or no traffic or other benefit in return. Blocking them is therefore an easy decision.

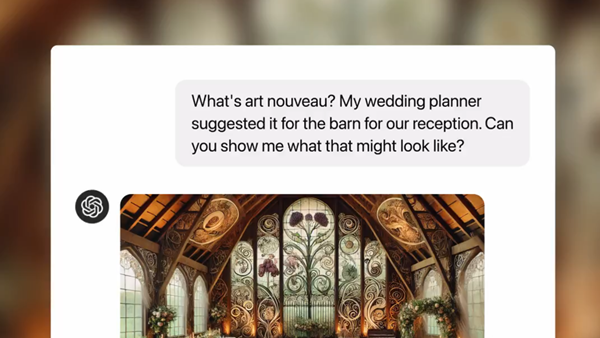

OpenAI, however, suggests that allowing AI crawlers to collect data could speed up progress toward AGI (Artificial General Intelligence). Would you be willing to contribute to that grand vision, given that most major publishers, including news websites, already prohibit such scraping?

The ai.robots.txt Open Source Project:

This project compiles a list of known crawlers from AI companies and a few unfriendly ones, providing website owners with a safe list to block without affecting their site's normal operations or traffic from major search engines.

If you're concerned about overly restricting access, you can opt to comment out certain crawlers, allowing them to continue scraping. For detailed information on each crawler, a simple Google search of its name should lead to explanations from the developers.

Project URL: https://github.com/ai-robots-txt/ai.robots.txt

Crawler List Includes:

    User-agent: AI2Bot
    User-agent: Ai2Bot-Dolma
    User-agent: Amazonbot
    User-agent: anthropic-ai
    User-agent: Applebot
    User-agent: Applebot-Extended
    User-agent: Bytespider
    # Note: Bytespider is ByteDance's crawler and is also used for Toutiao search. Consider how much traffic your site gets from Toutiao before deciding to block it.
    User-agent: CCBot
    User-agent: ChatGPT-User
    # Note: ChatGPT-User fetches pages on behalf of ChatGPT users and is not used to collect data for AI training. Decide whether to block it based on your situation.
    User-agent: Claude-Web
    User-agent: ClaudeBot
    User-agent: cohere-ai
    User-agent: Diffbot
    User-agent: DuckAssistBot
    User-agent: FacebookBot
    User-agent: facebookexternalhit
    User-agent: FriendlyCrawler
    User-agent: Google-Extended
    User-agent: GoogleOther
    User-agent: GoogleOther-Image
    User-agent: GoogleOther-Video
    User-agent: GPTBot
    User-agent: iaskspider/2.0
    User-agent: ICC-Crawler
    User-agent: ImagesiftBot
    User-agent: img2dataset
    User-agent: ISSCyberRiskCrawler
    User-agent: Kangaroo Bot
    User-agent: Meta-ExternalAgent
    User-agent: Meta-ExternalFetcher
    User-agent: OAI-SearchBot
    User-agent: omgili
    User-agent: omgilibot
    User-agent: PerplexityBot
    User-agent: PetalBot
    User-agent: Scrapy
    User-agent: Sidetrade indexer bot
    User-agent: Timpibot
    User-agent: VelenPublicWebCrawler
    User-agent: Webzio-Extended
    User-agent: YouBot
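
In a robots.txt file, a group of User-agent lines only takes effect once a rule follows it. The sketch below is one possible way to render such a file from a list of names. It assumes you want to disallow the whole site for every listed crawler. The helper name `build_robots_txt`, its `keep_allowed` parameter, and the short name list are made up for illustration and are not part of the project.

```python
# Hypothetical subset of the crawler names listed above.
AI_CRAWLERS = ["GPTBot", "CCBot", "ClaudeBot", "PerplexityBot"]


def build_robots_txt(names, keep_allowed=()):
    """Render a robots.txt group that disallows the whole site.

    Names in keep_allowed are written as comments, so those
    crawlers stay unaffected by the Disallow rule.
    """
    lines = []
    blocked = 0
    for name in names:
        if name in keep_allowed:
            # Commented out: this crawler may keep scraping.
            lines.append(f"# User-agent: {name}")
        else:
            lines.append(f"User-agent: {name}")
            blocked += 1
    if blocked:
        # One rule applies to every User-agent line in the group.
        lines.append("Disallow: /")
    return "\n".join(lines) + "\n"


print(build_robots_txt(AI_CRAWLERS, keep_allowed=["CCBot"]))
```

Passing a name in `keep_allowed` mirrors the "comment out" option described earlier: the line stays in the file as a reminder but no longer blocks that crawler.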

See an example robots.txt from Landian News: https://www.landiannews.com/robots.txt

It's worth noting that robots.txt works on the honor system: it only asks crawlers to stay away and cannot enforce anything. PerplexityBot, for instance, was found to keep scraping content even after being blocked, which shows the limits of relying on this method alone.
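
To see what a robots.txt file asks of a given crawler, you can parse it with Python's standard `urllib.robotparser` module. This is a minimal sketch: the sample rules, the helper name `blocked_agents`, and the example.com URL are placeholders. It only reports what the file requests; whether a crawler obeys is entirely up to the crawler.

```python
from urllib.robotparser import RobotFileParser

# Placeholder rules; a real check would read your own robots.txt.
ROBOTS_TXT = """\
User-agent: GPTBot
User-agent: PerplexityBot
Disallow: /
"""


def blocked_agents(robots_txt, agents, url="https://example.com/"):
    """Return the agents that the given robots.txt text asks not to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [agent for agent in agents if not parser.can_fetch(agent, url)]


print(blocked_agents(ROBOTS_TXT, ["GPTBot", "PerplexityBot", "Googlebot"]))
```

With these sample rules, Googlebot is not in the returned list because no group in the file applies to it.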

You could go further and add these crawler names to a blocklist in your Nginx server configuration, answering matching requests with 444, a non-standard Nginx code that closes the connection without sending any response.
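
One possible way to build such a blocklist is to generate an Nginx `map` block from the crawler names. The sketch below assumes the `map` block goes in the `http` context and the accompanying `if` goes inside a `server` block. The function name, the variable `$block_ai_crawler`, and the short name list are hypothetical. Each name is used as a case-insensitive regular expression, so a dot in a name such as iaskspider/2.0 matches any character.

```python
# Hypothetical subset of the crawler names listed above.
AI_CRAWLERS = ["GPTBot", "CCBot", "ClaudeBot", "Kangaroo Bot"]


def nginx_block_snippet(names, variable="$block_ai_crawler"):
    """Render an Nginx map block that flags matching User-Agent headers."""
    lines = [f"map $http_user_agent {variable} {{", "    default 0;"]
    for name in names:
        # "~*" marks a case-insensitive regular expression; the quotes
        # keep names that contain spaces in a single token.
        lines.append(f'    "~*{name}" 1;')
    lines.append("}")
    return "\n".join(lines)


print(nginx_block_snippet(AI_CRAWLERS))
# The flag could then be used inside a server block, for example:
print("# if ($block_ai_crawler) { return 444; }")
```

Test a generated configuration with `nginx -t` before reloading, since a mistake in a blocklist can lock out legitimate visitors.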

Be aware, however, that once you block at the server level, robots.txt no longer matters for those crawlers: they are rejected on arrival and never get the chance to read it. Not knowing they are unwelcome, they may keep retrying and being dropped, which can add to server load.

If you rely on robots.txt alone, crawlers that honor the protocol will stop scraping, but non-compliant ones may carry on and keep putting pressure on your server.