Samsung Expands Restrictions on Employee ChatGPT Use to Prevent Sensitive Data Leaks

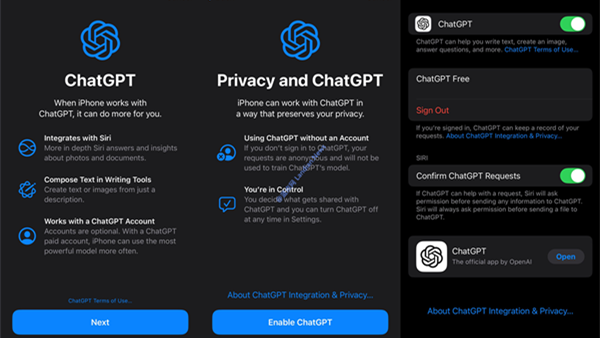

Samsung has previously experienced sensitive data leaks involving employees using ChatGPT, after which the company instructed its staff not to share any sensitive information with the AI chatbot. Now Samsung is broadening those restrictions: employees may still use ChatGPT on their personal devices and outside of work hours, but they are strictly prohibited from entering any company-related information, particularly intellectual property.

The earlier leak involved the disclosure of some product source code. The reason for expanding the restrictions now remains unclear. Samsung requires employees to follow its security guidelines and warns that any breach may result in disciplinary action, including termination.

Samsung is also developing its own AI software, including a chatbot that can help employees with tasks such as summarizing reports, writing software, and translating. The software is not yet in use.

Samsung's actions highlight the risks associated with AI. The company is neither the first nor the last to experience data leaks through ChatGPT use, and more businesses are likely to follow suit by restricting employee use of ChatGPT to prevent similar incidents.