Microsoft Launches Fine-Tuning Capability for Phi-3 Series AI Models to Improve Output Accuracy

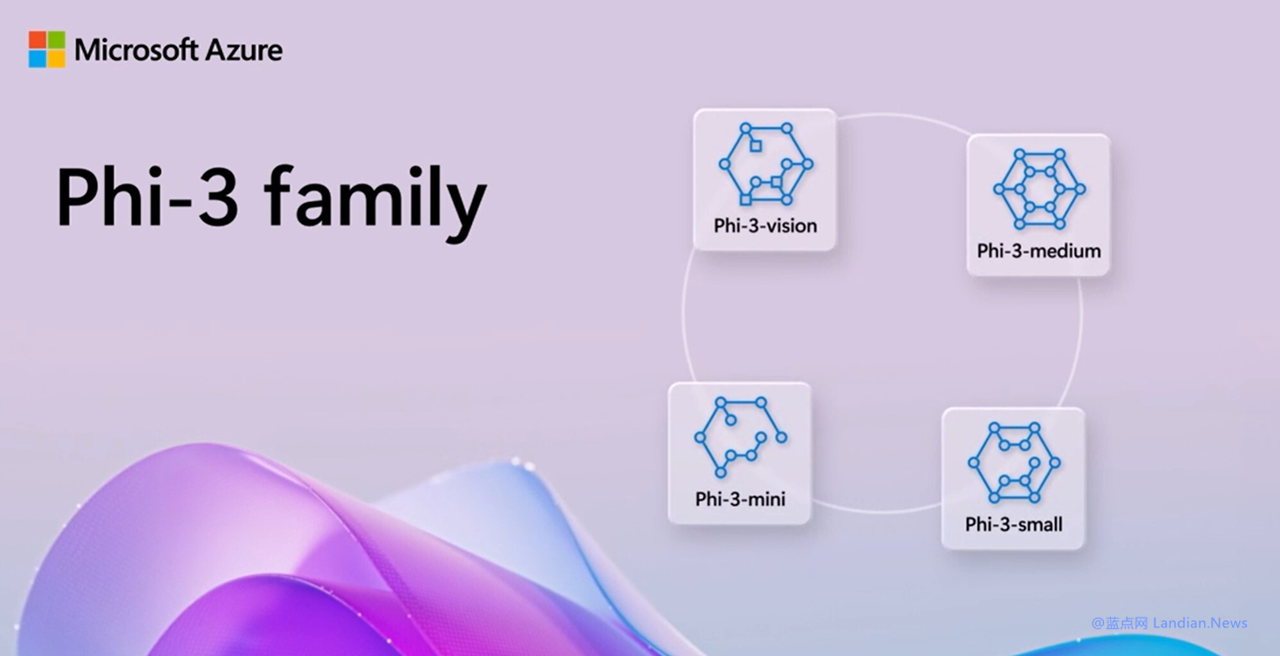

Phi is a series of artificial intelligence models developed by Microsoft Research. The series is defined by its small size: despite a relatively small parameter count, the models deliver strong performance at low cost and with low latency.

In April of this year, Microsoft launched the Phi-3 series, including Phi-3-mini (3.8 billion parameters) and Phi-3-medium (14 billion parameters). Each is offered with 4K and 128K context lengths to meet the varied needs of developers.

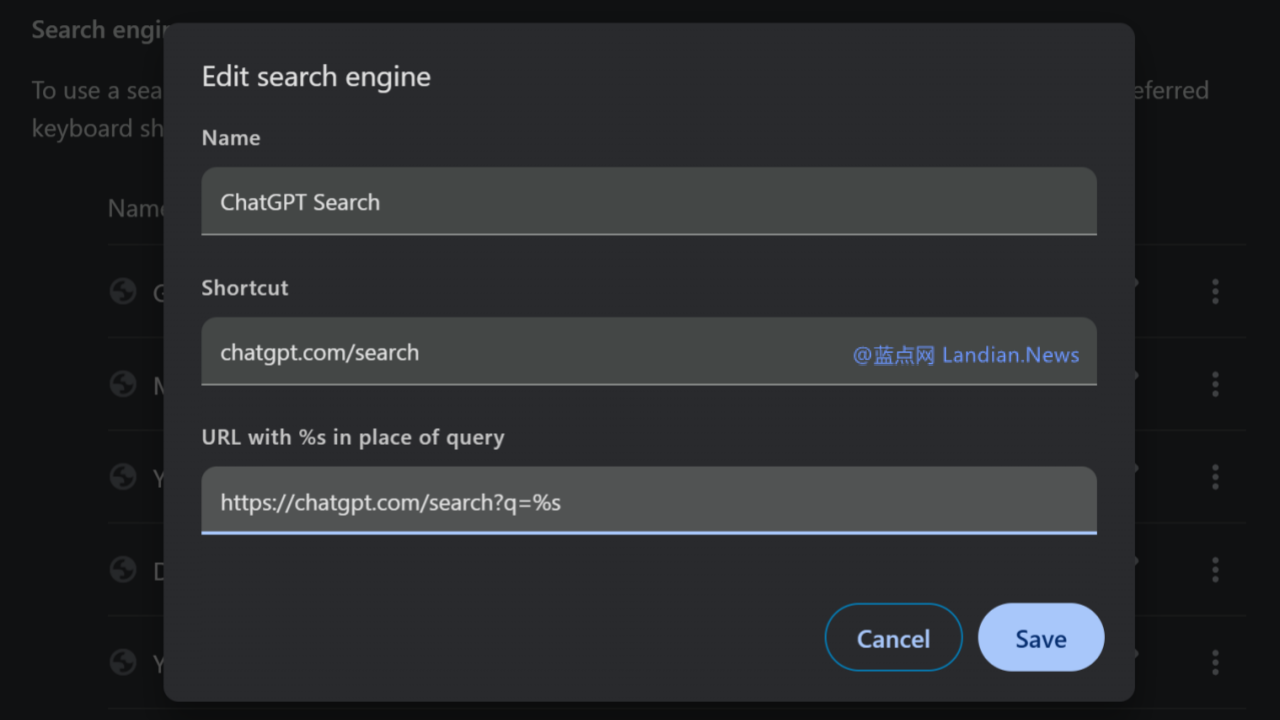

Today, Microsoft announced that fine-tuning for Phi-3 models is now available on the Microsoft Azure platform. Developers can adapt the base model to their own use cases, which can significantly improve the accuracy of its output.

For instance, developers can fine-tune Phi-3-medium to tutor students, or build a chat-based application that answers in a specific tone or response style.
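Fine-tuning for a tone or style starts with example conversations. The sketch below writes tutoring examples as JSON Lines, one conversation per line, using the `system`/`user`/`assistant` chat-message layout common to chat fine-tuning. This schema, the file name, the system prompt, and the two sample pairs are all assumptions for illustration; check Azure's fine-tuning documentation for the exact format it accepts.

```python
import json

# Hypothetical system prompt describing the desired tutoring style.
SYSTEM_PROMPT = "You are a patient tutor who answers briefly and ends with a question."

# Hypothetical training pairs; a real dataset would need many more examples.
EXAMPLES = [
    ("What is a prime number?",
     "Good question! A prime has exactly two divisors: 1 and itself. Can you name one?"),
    ("Why is the sky blue?",
     "Sunlight scatters off air molecules, and blue light scatters the most. "
     "What color do you think sunsets are, and why?"),
]


def to_chat_record(question: str, answer: str) -> dict:
    """Wrap one question/answer pair as a chat-style record (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }


def write_jsonl(path: str, pairs) -> int:
    """Write one JSON object per line and return the number of records written."""
    count = 0
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            f.write(json.dumps(to_chat_record(question, answer), ensure_ascii=False) + "\n")
            count += 1
    return count
```

Calling `write_jsonl("tutor_train.jsonl", EXAMPLES)` would produce a two-line training file. Keeping the same system prompt in every record is a deliberate choice here: it ties the learned style to one consistent instruction.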

Microsoft also announced the launch of Models-as-a-Service (MaaS). The models run as a serverless offering: developers call them remotely through the Microsoft Azure platform and do not have to provision or manage their own servers. Phi-3-small is currently available this way, so developers can quickly build applications on MaaS without worrying about infrastructure.
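Calling a serverless model comes down to an authenticated HTTP request. The following is a minimal sketch using only the Python standard library. The endpoint URL and key are placeholders, and the `/v1/chat/completions` route, the `Bearer` authorization header, and the response shape are assumptions modeled on common chat-completions APIs. Confirm the actual values shown for your own Azure deployment.

```python
import json
import urllib.request

# Placeholders (hypothetical): copy the real values from your Azure deployment.
ENDPOINT = "https://<your-endpoint>.<region>.models.ai.azure.com"
API_KEY = "<your-api-key>"


def build_chat_request(endpoint: str, api_key: str, user_message: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build, but do not send, a chat-completions POST request."""
    body = {
        "messages": [{"role": "user", "content": user_message}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=endpoint.rstrip("/") + "/v1/chat/completions",  # assumed route
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # assumed auth scheme
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the first choice's text (requires network access)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Separating request construction from sending lets you inspect or log the request before any network call. With real credentials, `send(build_chat_request(ENDPOINT, API_KEY, "Hello"))` would return the model's reply.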

Next, Microsoft plans to offer MaaS support on the Microsoft Azure platform for Phi-3-vision, the multimodal member of the family that can also process images, enabling developers to build more innovative applications on these models.

Microsoft also highlighted other models recently added to the Azure platform, including:

- OpenAI GPT-4o mini

- Mistral Large 2

- Meta Llama 3.1 family of models

- Cohere Rerank

New Azure AI Content Safety features are now enabled by default for Azure OpenAI Service: Prompt Shields, which guards against prompt-injection attacks, and protected material detection, which flags copyrighted content. Developers can also apply these features as content filters to other supported models to improve safety.
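When a content filter intervenes, client code should notice rather than treat the result as a normal answer. The sketch below checks parsed JSON responses for two signals. It assumes the shape used by chat-completions APIs such as Azure OpenAI: a choice whose `finish_reason` is `"content_filter"`, and an error body whose `code` is `"content_filter"` when the prompt itself is rejected. The sample dictionaries in the test are hypothetical, and field names should be verified against the service's documentation.

```python
from typing import Any, Dict, List


def filtered_choices(response: Dict[str, Any]) -> List[int]:
    """Return indexes of choices whose output was cut off by the content filter."""
    return [
        choice.get("index", i)
        for i, choice in enumerate(response.get("choices", []))
        if choice.get("finish_reason") == "content_filter"
    ]


def prompt_was_blocked(error_body: Dict[str, Any]) -> bool:
    """Return True if an error response says the prompt was rejected by the filter (assumed code)."""
    return error_body.get("error", {}).get("code") == "content_filter"
```

Using `.get` with defaults keeps both helpers safe on responses that omit these fields.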
